Mimicproject is an online platform to build interactive creative programs with machine learning.

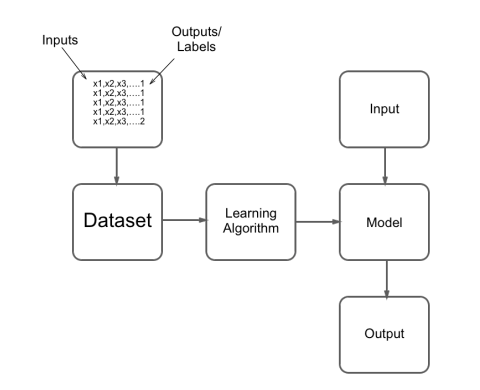

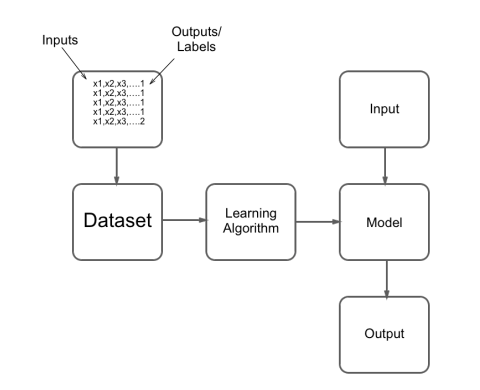

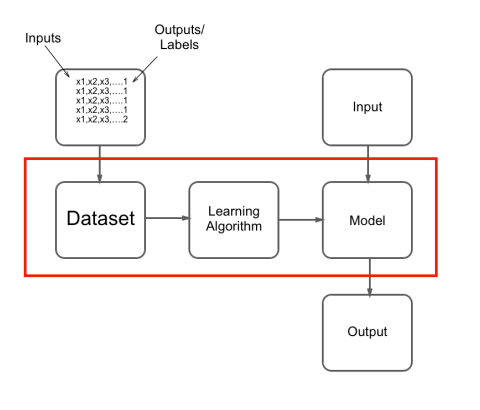

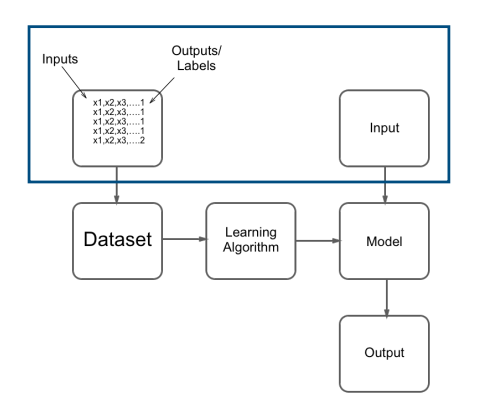

It’s all about labelled data. We build models that predict new values based on previous examples of input-output pairs.

We don’t have to program them ourselves, just provide labelled examples.

We show the learning algorithm pictures of cats, and pictures of dogs. It learns the difference between cats and dogs. The model can label new pictures.

We show the learning algorithm different dance poses, and it learns the difference between them. We can use the model to trigger things in a performance.

Simple Object Recogniser

Demo: https://mimicproject.com/code/546bd3c7-31ad-c1aa-e8eb-989e91b1f2e9?embed=true

Here we are building an image classifier. In a classifier, the labels are discrete. Background classes can be useful.

How many examples do we need? More examples = Better Model.

If we want our model to be able to distinguish between things based on a certain characteristic, we need to show it examples of that.

Representation is important.

MobileNet gives the probability of each of 1000 different objects being in the frame. Using all the pixel values would give far too many features, so this smaller representation is better. It only works for things that are in the original 1000-object dataset (ImageNet).
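To get a feel for the difference in size, the sketch below compares the number of raw pixel features with the number of MobileNet outputs. The 224 x 224 RGB frame size is an assumption (a common MobileNet input size, not stated in this post); the 1000 comes from the text above.

```javascript
// Assumed frame size: 224 x 224 pixels with 3 colour channels (RGB).
const frameWidth = 224;
const frameHeight = 224;
const channels = 3;

// One feature per pixel value if we fed the raw image to our own model.
const rawPixelFeatures = frameWidth * frameHeight * channels;

// One feature per class probability if we use MobileNet's output instead.
const mobileNetFeatures = 1000;

console.log(rawPixelFeatures);                     // 150528
console.log(rawPixelFeatures / mobileNetFeatures); // 150.528
```

Under that assumption, the MobileNet output is roughly 150 times smaller than the raw pixels.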

This is a complex demo: https://mimicproject.com/code/c6bffe36-65db-4d3d-d162-3ae7a52bbc32

Why doesn’t my model work?

Goal not specific enough

In a famous example, a researcher is training a small wheeled robot to avoid objects based on sensors on the front. The requirements for success? Reduce collisions. After training the control system, they upload it to the machine and wait expectantly. Nothing happens. They check the batteries and everything seems fine. It becomes apparent that the algorithm has decided the best strategy to avoid collisions is to just remain perfectly still.

The researcher updates the rules and sets off to train a system that doesn’t have collisions and also moves around a lot. Unfortunately, all they manage to create is a robot that spins around on the spot.

Data not specific enough

A model trained to identify cancerous growths actually learnt to identify rulers, as these were often present alongside the tumours.

Image description models will often report sheep in pictures containing grass, even when there are none. This is because all of the pictures of sheep the model had seen were surrounded by fields, so it learnt to identify the scenery, not the animal.

Imbalanced data

If you have lots of examples of one class, and few of another, sometimes the model will just learn to pick the common class (allowing high accuracy) and not have to learn anything about the problem.
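The effect is easy to reproduce with a toy example. The sketch below uses a made-up dataset (95 "cat" labels and 5 "dog" labels, chosen only for illustration) and a "model" that always predicts the common class.

```javascript
// Hypothetical dataset: 95 examples labelled "cat" and 5 labelled "dog".
const labels = Array(95).fill("cat").concat(Array(5).fill("dog"));

// A "model" that ignores its input and always predicts the common class.
const alwaysCat = () => "cat";

// Fraction of examples where the prediction matches the label.
const accuracy = (predict, trueLabels) =>
  trueLabels.filter((label) => predict() === label).length / trueLabels.length;

// Accuracy measured on the "dog" examples only.
const dogs = labels.filter((label) => label === "dog");
const dogAccuracy = accuracy(alwaysCat, dogs);

console.log(accuracy(alwaysCat, labels)); // 0.95
console.log(dogAccuracy);                 // 0
```

The overall accuracy looks high, but the model never finds a single dog, which is why it helps to check each class separately.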

Not all bad

Researcher Adrian Thompson was using machine learning techniques to iteratively build circuits using real live hardware. The solution he arrived at seemed to make no sense, with 5 logic gates seemingly disconnected from the rest. After much head scratching, it became apparent that the algorithm had made use of a previously unknown property of silicon to reach an unorthodox, but fully functional solution.

Machine learning may not take the path you planned and this may end up with something you did not want, or it may end up finding a great solution you would not have arrived at in a million years.

Making an instrument

Demo: https://mimicproject.com/code/7d93308a-83e1-ead3-180a-d84900ffe874

A great example of using camera classification to control audio output is Anna Ridler’s Drawing with Sound. Here, she trains a model to recognise common forms she draws. As a feed from a camera embedded in her glasses is analysed as she paints, recordings from sonatas are triggered.

Making a project

Once you have logged in to MIMIC, you can select the “my projects” dropdown from the main navigation bar and click the plus button to make a new document.

HTML stands for Hyper Text Markup Language. It is the standard markup language for creating Web pages and describes the structure of a Web page. It consists of a series of elements, which tell the browser how to display the content. Elements label pieces of content such as “this is a heading”, “this is a paragraph”, “this is a link”, etc.

All HTML documents must start with a document type declaration: `<!DOCTYPE html>`.

The HTML document itself begins with `<html>` and ends with `</html>`.

The visible part of the HTML document is between `<body>` and `</body>`.

Having made a new project, you will be taken to the main editor and viewer for that project. On the left is the output of your program, and on the right is the code editor. The code editor can be dragged across to be smaller or larger.

The root document is an HTML page. If you are familiar with web development, think of it as an “index.html”. As such, you can build up your HTML elements here and include Javascript in it.

p5.js and Functions

Functions in Javascript can also be stored in variables.

And, as in Python, they take named arguments in round brackets.

    var add = function(num1, num2) {
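As a minimal sketch of the idea, assuming the function simply adds its two arguments (the body is not given here), a function stored in a variable can be defined and called like this:

```javascript
// A function stored in a variable. The body is an assumed example.
var add = function(num1, num2) {
  return num1 + num2;
};

// Call it through the variable name, passing arguments in round brackets.
var total = add(2, 3);
console.log(total); // 5
```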

Demo: https://mimicproject.com/code/ff7ffb97-ff0f-ae93-0204-8c410e32182b

The two most important parts of a p5.js sketch are:

- `function setup(){}` - called once at the start
- `function draw(){}` - called on every frame
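A minimal sketch using both functions might look like the following. The canvas size, background shade and circle are arbitrary choices for illustration; `createCanvas`, `background` and `ellipse` are standard p5.js functions, so this needs p5.js loaded in the page to run.

```javascript
// Runs once when the sketch starts: set up the canvas.
function setup() {
  createCanvas(400, 400);
}

// Runs on every frame: clear the background and draw a circle.
function draw() {
  background(220);
  ellipse(200, 200, 50, 50);
}
```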

Variables are where we store things.

In Python, variables have no prefix, we just put the name.

In Javascript, you need to prefix with var or let.

p5.js has lots of built-in functions for drawing shapes (see https://p5js.org/zh-Hans/reference/). Here we see how we can store the positions and dimensions of shapes we want to draw in variables.
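For instance, a sketch along these lines keeps the position and size of a rectangle in variables and reuses them to place a circle inside it. The variable names and values are made up for illustration; `rect` and `ellipse` are standard p5.js functions.

```javascript
// Position and size of the rectangle, stored in variables.
var x = 50;
var y = 80;
var w = 120;
var h = 60;

function setup() {
  createCanvas(400, 400);
}

function draw() {
  background(220);
  // Rectangle with its top-left corner at (x, y).
  rect(x, y, w, h);
  // Circle centred inside the rectangle, as tall as the rectangle.
  ellipse(x + w / 2, y + h / 2, h, h);
}
```

Changing one variable, such as `x`, moves both shapes together, which is the main benefit of not typing the numbers in twice.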

frameCount stores a counter that goes up every frame.

    if(a>3) {
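As a rough illustration of combining the frame counter with a conditional, the sketch below moves a circle to the right every frame and wraps it back once it passes the edge of the canvas. The canvas size and the wrapping rule are assumptions made for this example; `frameCount` and `width` are standard p5.js variables.

```javascript
var x = 0;

function setup() {
  createCanvas(400, 200);
}

function draw() {
  background(220);
  // frameCount goes up by one each frame, so the circle drifts right.
  x = frameCount;
  // Conditional: once the circle is past the right edge, wrap it around.
  if (x > width) {
    x = x % width;
  }
  ellipse(x, 100, 40, 40);
}
```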

We can use fill() and stroke() to colour the middle and border of our shape.

- ‘rgb(0,0,0)’

We can use the conditional (if statement) to pick the colour based on the mouse position.

- mouseX
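One possible way to put this together is sketched below. `pickColour` is a hypothetical helper written for this example, and the choice of red on the left and blue on the right is arbitrary; `fill`, `stroke`, `mouseX` and `width` are standard p5.js names.

```javascript
// Hypothetical helper: choose a colour string from the mouse position.
function pickColour(mx, canvasWidth) {
  if (mx < canvasWidth / 2) {
    return 'rgb(255,0,0)'; // left half of the canvas: red
  } else {
    return 'rgb(0,0,255)'; // right half of the canvas: blue
  }
}

function setup() {
  createCanvas(400, 400);
}

function draw() {
  background(220);
  fill(pickColour(mouseX, width)); // colour of the middle
  stroke('rgb(0,0,0)');            // colour of the border
  ellipse(200, 200, 100, 100);
}
```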

    for(var i=0; i<5; i=i+1) {
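A loop with this header runs its body five times, with `i` going from 0 to 4. As a small sketch (the body and the spacing values are assumptions for illustration), it could be used to work out the x positions of five evenly spaced circles:

```javascript
// Collect the x positions of five circles spaced 60 pixels apart.
var positions = [];
for (var i = 0; i < 5; i = i + 1) {
  positions.push(40 + i * 60);
}

console.log(positions.length); // 5
console.log(positions[4]);     // 280
```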

Arrays in Javascript are written the same way as lists in Python:

    var a = [1,2,3,4,5]
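A few everyday operations on that array, with the rough Python equivalents noted in the comments:

```javascript
var a = [1, 2, 3, 4, 5];

console.log(a[0]);     // 1 (indexing starts at zero, as in Python)
console.log(a.length); // 5 (Python would use len(a))

a.push(6);             // like a.append(6) in Python
console.log(a.length); // 6
```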

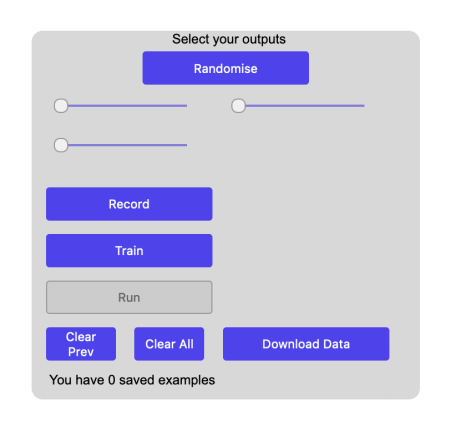

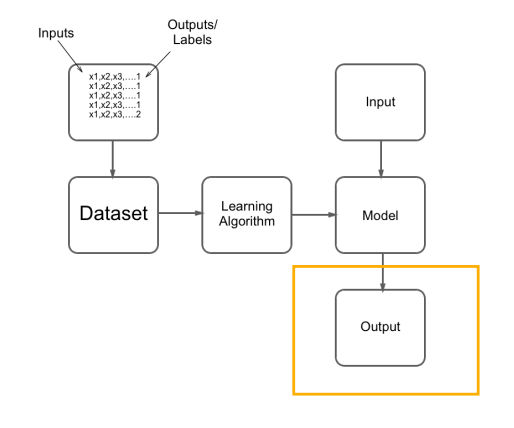

We have a library, Learner.js, that lets you add all this functionality into a project with minimal fuss.

It gives you a nice GUI to handle all the main machine learning functionality. All you have to do is hook up the inputs and outputs.

    var learner = new Learner();

    learner.newExample(

It is important to note that the one piece of code above serves two purposes. If you are recording, every time you add a new example, the pairing is stored in the dataset. If you are running, just the input is used and given to the model, which then provides a new output based on the data it has seen.

    learner.onOutput = (output)=> {
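To make the record/run behaviour concrete, here is a self-contained sketch. `MockLearner` is a hypothetical stand-in written for this example. It is not the real Learner.js, and its `recording` and `running` flags and its nearest-neighbour model are assumptions; only the names `newExample` and `onOutput` come from the snippets above.

```javascript
// MockLearner is a hypothetical stand-in for illustration only. It is not
// the real Learner.js, and its internals are assumptions.
class MockLearner {
  constructor() {
    this.recording = false;   // true while collecting labelled examples
    this.running = false;     // true while predicting from new inputs
    this.dataset = [];        // stored { input, output } pairs
    this.onOutput = () => {}; // callback that receives predictions
  }

  // One call, two purposes, depending on the current mode.
  newExample(input, output) {
    if (this.recording) {
      this.dataset.push({ input, output });
    } else if (this.running && this.dataset.length > 0) {
      this.onOutput(this.predict(input));
    }
  }

  // Nearest neighbour: return the output of the closest stored input.
  predict(input) {
    const dist = (a, b) =>
      Math.sqrt(a.reduce((sum, v, i) => sum + (v - b[i]) ** 2, 0));
    let best = this.dataset[0];
    for (const example of this.dataset) {
      if (dist(example.input, input) < dist(best.input, input)) {
        best = example;
      }
    }
    return best.output;
  }
}

const learner = new MockLearner();
const outputs = [];
learner.onOutput = (output) => { outputs.push(output); };

learner.recording = true;
learner.newExample([0, 0], 0);   // stored with label 0
learner.newExample([10, 10], 1); // stored with label 1

learner.recording = false;
learner.running = true;
learner.newExample([9, 8], 0);   // the label is ignored, only the input is used
console.log(outputs[0]);         // 1
```

In the real library the GUI switches between recording and running for you; the flags here only make that switch visible.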

Body Particles

You will also note an additional feature of Learner.js here: there is a slight delay (three seconds) before recording starts, allowing you to get into position. The recording also stops after three seconds, and both of these time limits can be controlled in the code. This is a useful tool when recording your own datasets, especially in the case of body tracking camera input.

If recording begins immediately when you press the button on your computer, it may take you a few video frames to adopt the position for that class. This means there will be several examples in your dataset that do not describe your body in the position for that class, but just how your body happens to be as you move into it. Having this mislabelled, erroneous data in the dataset will make the learning algorithm’s job harder, and will almost certainly make your model’s performance worse.
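The timing logic can be sketched independently of the library. `shouldRecord` below is a hypothetical helper, not part of Learner.js; the three-second values match the defaults described above, and the 500 ms frame spacing is an arbitrary choice for illustration.

```javascript
// Hypothetical helper (not part of Learner.js): decide whether a frame that
// arrives elapsedMs after the record button was pressed should be stored.
function shouldRecord(elapsedMs, delayMs = 3000, durationMs = 3000) {
  return elapsedMs >= delayMs && elapsedMs < delayMs + durationMs;
}

// Frames arriving every 500 ms for 8 seconds after the button press.
const frameTimes = [];
for (let t = 0; t < 8000; t += 500) {
  frameTimes.push(t);
}

// Keep only the frames inside the recording window.
const kept = frameTimes.filter((t) => shouldRecord(t));
console.log(kept.length); // 6
console.log(kept[0]);     // 3000
```

Frames from the first three seconds, when you are still moving into position, never reach the dataset.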

About this Post

This post is written by Siqi Shu, licensed under CC BY-NC 4.0.